
Fixing 7digital’s Music Search, Part one: The Gargantuan Index

I’m Michael Okarimia, and since January 2014 I’ve been in the role of Lead Developer of the Content Discovery Team at 7digital. The Content Discovery team recently improved the catalogue search infrastructure for the 7digital API. This is the first of a series of posts which describe how we made 7digital’s music search usable for our users and clients.

This is a three-part series of posts describing the work we’ve done on 7digital’s search platform. This first post describes the issues 7digital had with its platform, the second describes how we improved response times, and the third and final post covers how we improved the relevancy of the search results.

The Problem with Music Search; The state of play in Jan 2014

Searching for a music track in 7digital’s API is often the first point of contact for accessing its catalogue of 27 million tracks. The API that 7digital provides has many publicly accessible web services, each reached via a specific URL, which we refer to as API endpoints. To stream or purchase a track, one first needs to know what its 7digital track ID is. 7digital track IDs mean nothing to the average end user, so searching for a track by its title and/or artist name is a very common method of retrieving a 7digital track ID. The client consuming the API can then use this 7digital track ID to access the music streaming service on the streaming API endpoints.

The title, artist, price and release date of a track are referred to as track metadata, which is data pertaining to the track, but not the audio file of the track itself.

The track search endpoint, as its name suggests, accepts search terms as text, and returns in its search results the metadata of any matching tracks.

This is a track/search response from the 7digital API: http://api.7digital.com/1.2/track/search?q=Happy%20pharrell&oauth_consumer_key=YOUR_KEY_HERE&country=GB&pagesize=2
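As a minimal sketch, the request above could be assembled in Python as follows. The parameter names and base URL are copied from the example URL; the helper name `build_track_search_url` is made up for illustration and is not part of any 7digital client library.

```python
from urllib.parse import quote, urlencode

# Base URL taken from the example request in this post.
API_ROOT = "http://api.7digital.com/1.2"


def build_track_search_url(query, consumer_key, country="GB", page_size=2):
    """Return a track/search URL for the given search terms (hypothetical helper)."""
    params = {
        "q": query,
        "oauth_consumer_key": consumer_key,
        "country": country,
        "pagesize": page_size,
    }
    # quote_via=quote encodes spaces as %20 (the default, quote_plus, would emit "+").
    return f"{API_ROOT}/track/search?{urlencode(params, quote_via=quote)}"


print(build_track_search_url("Happy pharrell", "YOUR_KEY_HERE"))
```

Run with the placeholder key, this prints the same URL as the example request. Only the URL is built here; no request is sent.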

The track search endpoint was powered by web servers which sent search requests to a SOLR web application. SOLR provides HTTP access to a Lucene search index, and Lucene is a very widely used piece of text search software. One example of where Lucene is used is searching for words in a collection of books in a library. Many people will be familiar with the search engines on Twitter or Wikipedia, which use Lucene to search for specific pieces of text in a massive corpus of text.

The two biggest problems were:

- In January 2014 the average ~/track/search response time was 4000 milliseconds.

- Search results often were wrong, out of date, or would return errors.

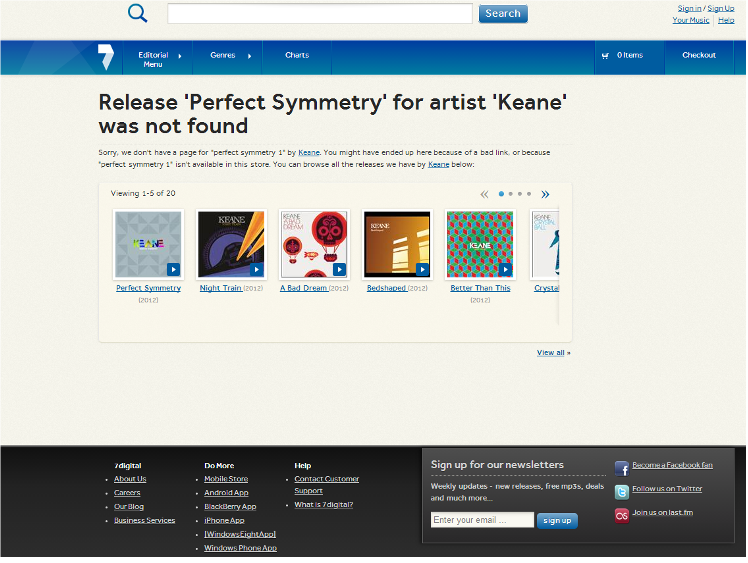

In addition to being slow, search results would often contain releases that were no longer available or had been updated. This manifested itself as a poor experience for users of the 7digital.com site, who would be greeted by this message:

This used to be a frequent experience for users, caused by inconsistencies between the search data store and the catalogue data store.

This was due to the platform having a separate search database and a catalogue database, which contained different results for the same content. The search database was updated in a different manner to the catalogue database and they were not always consistent with one another. More on that later.

Why was search so slow?

The metadata of the tracks was stored in a search index that was 660 GB in size, which is extremely large compared with many search indexes. Various tweaks to JVM and memory settings were made, but none of them produced a lasting improvement.

660 GB is very large for a Lucene search index. Why was it so big?

The index size was 660 GB, containing 660,000,000 documents. Each document represented all the metadata for a single track.

It’s slow, so why not improve the hardware it was running on?

A copy of the SOLR index was replicated onto four physical servers, each with 24 cores, 128 GB of RAM and 1.9 TB of SSD storage. The hardware the SOLR servers ran on had been upgraded, initially from smaller virtual machines to larger ones, and later to physical hardware. This was not cost effective given the response times we were getting!

If 7digital’s catalogue contains 27 million tracks, and one document represents a single track, then why were there 660 million documents in the search index?

According to the 7digital API documentation, here’s what a track/search response from the 7digital API looks like: http://api.7digital.com/1.2/track/search?q=Happy%20pharrell&oauth_consumer_key=YOUR_KEY_HERE&country=GB&pagesize=2

In the response there are 29 fields representing a track. Every one of the fields in the API response was stored in the Lucene search index. However, a search will only match search terms against fields such as track title or artist name, rather than bit rate or image URL.

The structure of the track index was such that the data was denormalised. A track could be available in any of the 44 territories 7digital operates in. There would be one track document for each track, multiplied by the number of territories the track was available in. To make a single track searchable in all territories, 44 documents would be added to the index. Almost all the field values in those documents for a given track would be identical, apart from fields specific to a territory like the price, currency and release date.

The reason for the huge search index size was that it was being used as a document store as well as a search index. It worked accurately as a document store, but performed poorly as a search index.
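The denormalisation described above can be illustrated with a small Python sketch. The field names and values below are invented for illustration and are not the real index schema; the totals are the figures quoted in this post, with a gigabyte assumed to be 10^9 bytes.

```python
def denormalise(track, territory_details):
    """Return one search document per territory the track is available in."""
    return [
        {**track, "country": country, **details}
        for country, details in territory_details.items()
    ]


# Hypothetical track and territory data, for illustration only.
track = {"trackId": 1, "title": "Example Track", "artist": "Example Artist"}
territories = {
    "GB": {"price": 0.99, "currency": "GBP"},
    "US": {"price": 1.29, "currency": "USD"},
}
docs = denormalise(track, territories)
print(len(docs))  # 2 documents for a track available in 2 territories

# Back-of-envelope averages from the figures quoted in this post.
TRACKS = 27_000_000
DOCUMENTS = 660_000_000
INDEX_BYTES = 660 * 10**9  # assumes 1 GB = 10^9 bytes

print(round(DOCUMENTS / TRACKS, 1))  # 24.4 documents per track on average
print(INDEX_BYTES // DOCUMENTS)      # 1000 bytes per document on average
```

On those numbers, each track appears in the index about 24 times on average, and every copy repeats the same title and artist fields.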

Unlike some other NoSQL use cases, tracks were almost always being added on an hourly basis, and very few were being removed; every day sees more music released worldwide.

How were these 27 million tracks turned into a searchable index?

Updates to the index were incremental: minute-by-minute changes to the catalogue were sent via HTTP to the master SOLR server, which turned the data into a single index. This master server did not serve any search queries. That work was done by four other SOLR servers, which periodically copied the index from the master SOLR server. These four servers are known as slaves.

Replication from the master SOLR server to the slaves would take around 30-60 minutes, depending on the volume of changes.

Due to the incremental updates to the Lucene index, the number of track documents increased on an hourly basis. Within the Lucene index the maxDoc count exceeded the number of searchable documents by a large margin. In Lucene, the maxDoc count is simply the number of documents in the index, but this includes deleted ones too, which will not be returned in a search result. 30% of the 660 GB index consisted of deleted, non-searchable documents. The deleted documents used disc space and hurt search performance.

On a previous occasion in 2013, running a Lucene optimize command on this index took over 24 hours and prevented any new tracks from becoming searchable. Doing this regularly was not possible if the organisation needed updates on a minute-by-minute basis.

Because the deleted documents remained in the search index, the index gradually grew over time. In six months it increased by 160 GB.
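To make the relationship between the total document count and the searchable document count concrete, here is a small Python sketch. The searchable count used below is an assumption chosen to match the 30% figure; this post only quotes the total of 660 million documents and the 30% share of the index size.

```python
def deleted_fraction(max_doc, num_docs):
    """Fraction of index entries that are deleted (counted but not searchable)."""
    if max_doc <= 0 or not 0 <= num_docs <= max_doc:
        raise ValueError("expected 0 <= num_docs <= max_doc and max_doc > 0")
    return (max_doc - num_docs) / max_doc


# Assumption: 660 million is the total count including deleted documents,
# and 462 million of them are still searchable.
MAX_DOC = 660_000_000
NUM_DOCS = 462_000_000

print(f"{deleted_fraction(MAX_DOC, NUM_DOCS):.0%}")  # 30%

# Disc space taken up by deleted documents, using the 30% figure from this post.
INDEX_GB = 660
print(INDEX_GB * 30 // 100)  # 198 (GB)
```

On these assumed counts, roughly 198 GB of the index was occupied by documents that could never appear in a search result.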

So what was the problem with updating the index with new tracks as they were imported into 7digital’s platform, incrementally?

The pain points of a 660 GB index, updated incrementally, were:

- Data consistency! The delay between a track change being made on the source SQL database and that change propagating to the SOLR slave servers would be at least two hours. There were many times when the change had not yet taken effect in the SOLR index, resulting in a poor user experience;

- It was slow to apply larger volumes of changes. However, if there were fewer than a few hundred new tracks to add, updates were fairly speedy (within a few minutes);

- The index could not fit in the memory of its servers, as it exceeded the 128 GB of RAM available. This resulted in it being read from disc, which is an order of magnitude slower;

- Since an unpredictable number of new tracks was being added to the master index, a new version of the index was replicated to the slave servers every hour. Each time this happened it caused any SOLR caching to be invalidated;

- Each cache invalidation triggered a JVM garbage collection process which was resource intensive and chewed up memory and CPU, resulting in slow response times and SOLR server connection timeouts. Many searches would simply fail and return a 503 HTTP error response;

- Occasionally the index contained erroneous data which would have to be manually updated using a curl command, which was risky;

- Serving traffic from such a large index required a lot of hardware;

- It was expensive to host this index in a virtualized environment; for example, just one of the slave servers was similar in specification to an i2.8xlarge AWS instance.

Why not create the entire index all at once, rather than sending constant updates? Wouldn’t that reduce the index size and help performance?

Yes, it would. This was attempted in 2013, but it took around five days to complete, during which time no new tracks could be added to the search engine. The alternative was to acquire another large server with a terabyte of storage space and build the index there, but this still took five days. At the end of that process you would have an index with no deleted documents, yet it would be nearly a week out of date. This was clearly not acceptable for a fast-moving multinational music market.

Now I know why search was so slow. Why were the search results often wrong?

The data returned in a search result was retrieved entirely from a single document in the Lucene index. Almost all of the other, non-search catalogue API endpoints were querying a different SQL database, which was the original data source for the metadata. Given the time it took for the data to be read from the original SQL database, posted to the SOLR master server and then replicated to the slave servers, there would often be a gap in time before the two databases were consistent with each other. Occasionally an update would not make it to the SOLR slaves, and the search API would return stale results which would have to be manually updated.

Sounds like a nightmare. How did you solve these problems?

It was! Now that we’ve adequately described the problem, my next post will explain how we fixed the data consistency problem and also improved search response times by 88%.